We will cover:

- What is search intent and why does search intent matter for SEO?

- Problems arising from not understanding search intent

- Existing software approaches (TF-IDF)

- A market driven approach to optimisation with two types of search intent errors

- Visualising intent and Predicting the optimal taxonomy and interlinking structure

- What the experts say

- Conclusion

What is search intent and why does search intent matter for SEO?

Search Intent is literally the intention behind the search query. People use different search queries even though they seek to satisfy the same intent, so search intent usually has a one-to-many relationship with keywords.

The reason why search intent matters is that the goal of SEO is to ensure the content ranking for the search query has the highest statistical probability of satisfying the desire of the user, which could be:

- Making a purchase or enquiry

- Searching for something else as a follow up

- Or nothing, the search has been satisfied.

Therefore SEOs would want to ensure that sites have only one dedicated page for each search intent. For example, someone searching for ‘black hoodie’ and someone searching for ‘mens hoodies’ could by and large have the same search intent, so the site should have a single page optimised for both search queries instead of two separate content pages.

Getting this right means:

- Better conversion rates – the user sees an image of black hoodies on the page and knows they’re on the right page without any need to navigate anywhere else.

- Higher rankings, because the site has consolidated the 2 URLs onto a single page, increasing the page level authority. The page is also doing a better job of satisfying the user query than other ranking pages that haven’t been optimised for the search intent.

Problems arising from not understanding search intent

If a site doesn’t address or indeed optimise its architecture toward search intent, it’s likely to suffer from the following symptoms:

Florida 2 – If your site’s alignment with search intent is 2 sigmas (standard deviations) away from Google’s internal intent-content alignment threshold in your market, then you’re likely to have lost out in the recent Florida 2 Google algorithm update (12th March 2019), and your chances of ranking highly in this era of SEO and going forward are severely reduced.

Content overload – where a single URL has so much content that it ranks for multiple intents, thereby weakening its rankings.

Cannibalisation of rankings – where multiple URLs of the same site rank for the same query beyond page 1. Cannibalisation on page 1 is not such a problem, as it yields more share of voice and probably has more to do with Google not being able to clearly discern what content will satisfy the user query. The users of any one query are likely to be made up of multiple audiences, which could also explain why.

Lower conversion rates – because the user has more work to do by navigating through the site or bouncing out to find other ranking sites that present better content.

Less Page Authority – authority is spread across several URLs rather than consolidated on one, which, together with inferior content, results in lower rankings.

Existing software approaches (TF-IDF)

Term Frequency – Inverse Document Frequency (TF-IDF) is currently the algorithm of choice for enterprise software platforms like BrightEdge and Searchmetrics for determining the importance of a word in a document (or, in the SEO use case, a web page). What is it? TF-IDF is essentially the product of two values: how often a given word is repeated in a document (TF), and the inverse of the proportion of web pages within the website that contain that word (IDF). The result is a weighting showing the word’s importance for a given web page.

Software attempts to solve search intent by using TF-IDF to help users map new keywords to existing pages. This works by seeing which web page has the highest TF-IDF score for the new search query and recommending that page as the mapping.
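To make the mapping step concrete, here is a minimal sketch in Python. It is not how BrightEdge or Searchmetrics implement it: the page texts, URLs, whitespace tokeniser and the `best_page_for` helper are all assumptions made for illustration, and the IDF uses a plain natural log.

```python
import math
from collections import Counter


def tokenize(text):
    # Naive whitespace tokeniser (an assumption; real tools normalise far more).
    return text.lower().split()


def tf_idf_scores(pages):
    """Return {url: {term: tf-idf weight}} for a dict of {url: page text}."""
    tokens = {url: tokenize(text) for url, text in pages.items()}
    n_pages = len(pages)
    # Document frequency: the number of pages that contain each term.
    doc_freq = Counter(term for toks in tokens.values() for term in set(toks))
    scores = {}
    for url, toks in tokens.items():
        counts = Counter(toks)
        scores[url] = {
            term: (count / len(toks)) * math.log(n_pages / doc_freq[term])
            for term, count in counts.items()
        }
    return scores


def best_page_for(keyword, pages):
    """Map a keyword to the page with the highest summed TF-IDF score."""
    scores = tf_idf_scores(pages)
    totals = {
        url: sum(weights.get(term, 0.0) for term in tokenize(keyword))
        for url, weights in scores.items()
    }
    best_url = max(totals, key=totals.get)
    # No page scores above zero: nothing on the site matches the keyword.
    if totals[best_url] == 0:
        return None, totals
    return best_url, totals


# Hypothetical site content, for illustration only.
pages = {
    "/coffee-tables": "coffee tables oak coffee table glass coffee table",
    "/sofas": "sofas corner sofa leather sofa fabric sofa",
    "/beds": "beds double bed king size bed",
}

print(best_page_for("leather sofa", pages)[0])
print(best_page_for("garden gnome", pages)[0])
```

In this sketch a keyword with no matching page returns `None`, which corresponds to a recommendation to create a new landing page.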

A number of advantages include the fact that it can be used across many different languages and that it’s very easy to scale.

The downside with this approach is that it doesn’t take into account what the user or the market thinks about a product or whatever they are searching for.

For example, you may have a furniture store and a page on coffee tables. Then from your keyword research, you decide that “side table” would be a good search term to optimise for. The problem there is that TF-IDF is likely to recommend creating a new landing page, as the words “coffee” and “side” have nothing in common from a purely mathematical perspective.

A market driven approach to optimisation

We believe a better approach is one that makes use of consumer psychology and cultural norms. This works by comparing the search engine results of two keywords and seeing how much the content of the top ranking pages overlaps. If there is sufficient overlap then the two keywords share the same search intent – if not then the keywords should be optimised on different URLs. This market driven approach is dealt with at scale by the Intent API.
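One simple way to picture the comparison is a set overlap between the top results for two keywords. The sketch below is not the Intent API’s implementation: it compares ranking URLs rather than page content, uses Jaccard similarity, and the 0.4 threshold, function names and example URLs are all assumptions chosen for illustration.

```python
def serp_overlap(results_a, results_b, top_n=10):
    """Jaccard overlap between the top-N ranking URLs of two keywords."""
    top_a, top_b = set(results_a[:top_n]), set(results_b[:top_n])
    if not top_a and not top_b:
        return 0.0
    return len(top_a & top_b) / len(top_a | top_b)


def same_intent(results_a, results_b, threshold=0.4, top_n=10):
    """Treat two keywords as one intent when their SERPs overlap enough.

    The threshold is an arbitrary assumption, not a known industry value.
    """
    return serp_overlap(results_a, results_b, top_n) >= threshold


# Hypothetical ranking URLs for two keywords.
black_hoodie = [
    "shop-a.example/hoodies",
    "shop-b.example/mens-hoodies",
    "shop-c.example/hoodies/black",
    "shop-d.example/hoodies",
]
mens_hoodies = [
    "shop-b.example/mens-hoodies",
    "shop-a.example/hoodies",
    "shop-d.example/hoodies",
    "shop-e.example/mens/hoodies",
]

print(serp_overlap(black_hoodie, mens_hoodies))
print(same_intent(black_hoodie, mens_hoodies))
```

Here three of the five distinct URLs appear in both result sets, so the two keywords would be mapped to a single page under the assumed threshold.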

There are two cases of search intent errors:

Case 1 – Content Overload: One Page, Multiple Intentions

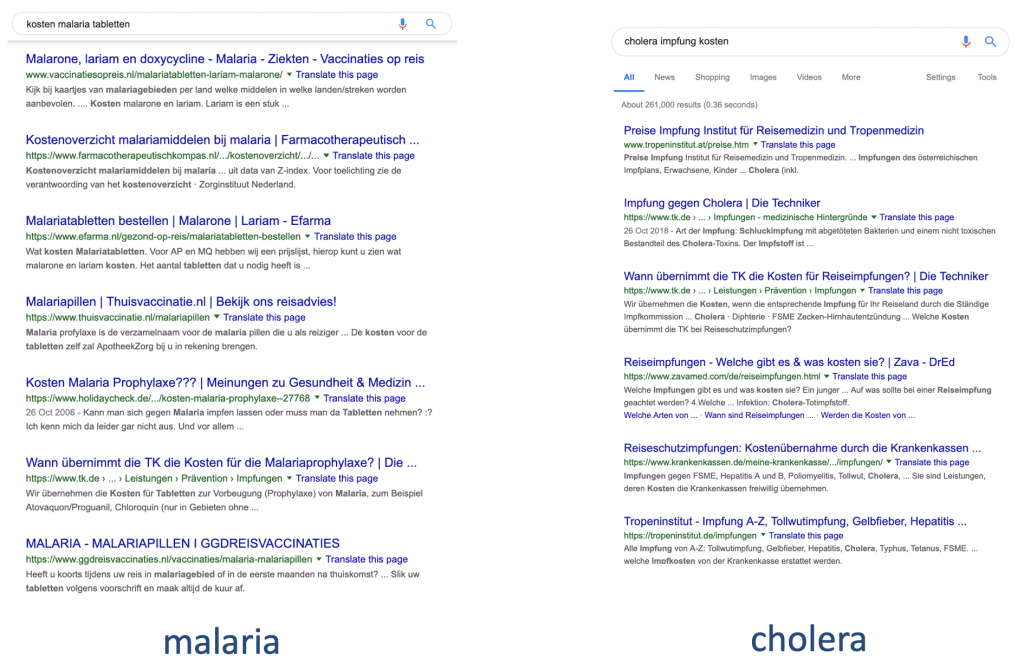

This is where one page has so much content that it ranks for a number of search intentions. Take the two Google DE results for the cholera and malaria tablet cost queries:

The results above lack similarity. If we look in more detail, we can see why:

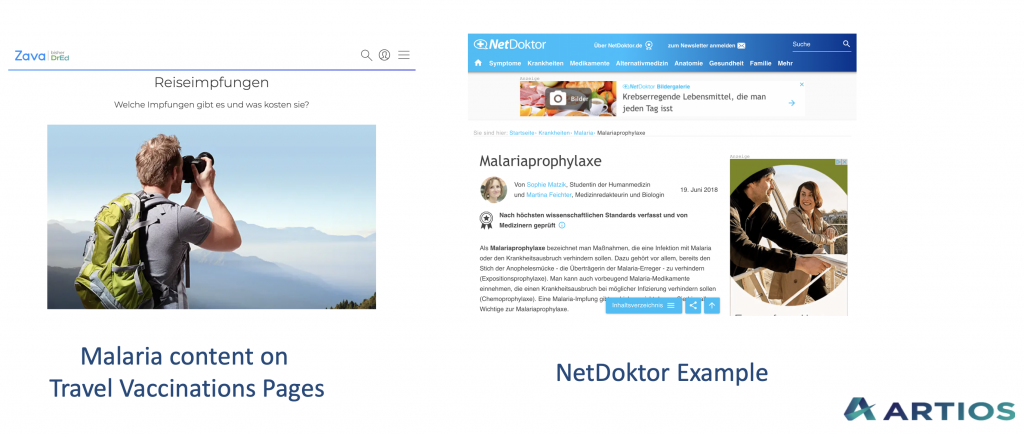

The site on the left has content optimised for travel vaccinations, while the site on the right has a dedicated landing page. The answer in this case would be to migrate the malaria tablet cost content to a new or existing landing page for Malaria tablets.

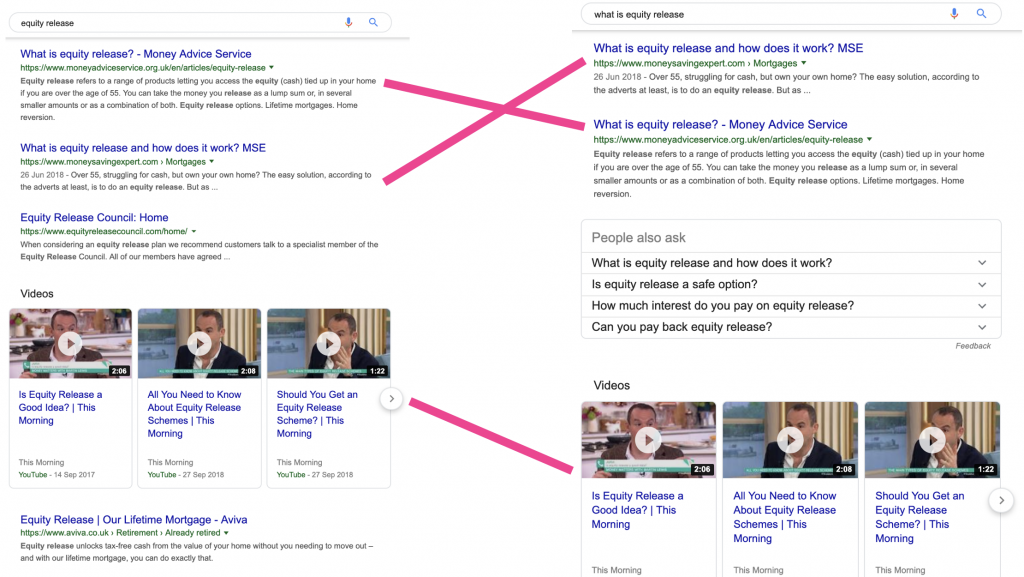

Case 2 – Cannibalisation: One Intention, Multiple URLs

This is where one keyword SERP shows multiple URLs from a site ranking outside the top 10. If there are multiple URLs ranking inside the top 10 then it’s okay, as it’s more likely that the search engine is unsure about the user intent. Outside the top 10, it’s more likely that the content that would satisfy the search intent is spread across 2 or more URLs. Take the following:

The content ranking in the results has enough similarity in the top 5 – look at the words in the titles, which are largely shared. This means the two keywords have the same search intent. Looking deeper into the content:

The 3 pages on the left, despite ranking for different keywords, share the same search intent and should be merged onto the highest ranking web page.
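Both cases can be checked mechanically once you have ranking data for a site. The following is a minimal sketch under stated assumptions: the `(keyword, url, position)` row format, the `intent_of` keyword-to-intent mapping, the function names and the sample data are all hypothetical.

```python
from collections import defaultdict


def find_cannibalisation(rankings, cutoff=10):
    """Case 2: keywords where 2+ URLs of the site rank outside the top `cutoff`.

    `rankings` is an iterable of (keyword, url, position) rows for one site.
    """
    urls_by_keyword = defaultdict(set)
    for keyword, url, position in rankings:
        if position > cutoff:
            urls_by_keyword[keyword].add(url)
    return {
        kw: sorted(urls) for kw, urls in urls_by_keyword.items() if len(urls) > 1
    }


def find_content_overload(rankings, intent_of):
    """Case 1: URLs that rank for keywords belonging to 2+ different intents."""
    intents_by_url = defaultdict(set)
    for keyword, url, _position in rankings:
        if keyword in intent_of:
            intents_by_url[url].add(intent_of[keyword])
    return {
        url: sorted(intents)
        for url, intents in intents_by_url.items()
        if len(intents) > 1
    }


# Hypothetical ranking rows and intent labels, for illustration only.
rankings = [
    ("malaria tablet cost", "/travel-vaccinations", 14),
    ("cholera vaccine cost", "/travel-vaccinations", 8),
    ("yellow fever jab", "/yellow-fever", 12),
    ("yellow fever jab", "/travel-clinic/yellow-fever", 17),
]
intent_of = {
    "malaria tablet cost": "malaria tablets",
    "cholera vaccine cost": "cholera vaccine",
    "yellow fever jab": "yellow fever vaccine",
}

print(find_cannibalisation(rankings))
print(find_content_overload(rankings, intent_of))
```

Multiple URLs inside the top 10 are deliberately ignored by `find_cannibalisation`, in line with the reasoning above that they are not treated as errors.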

Mathematical Evidence

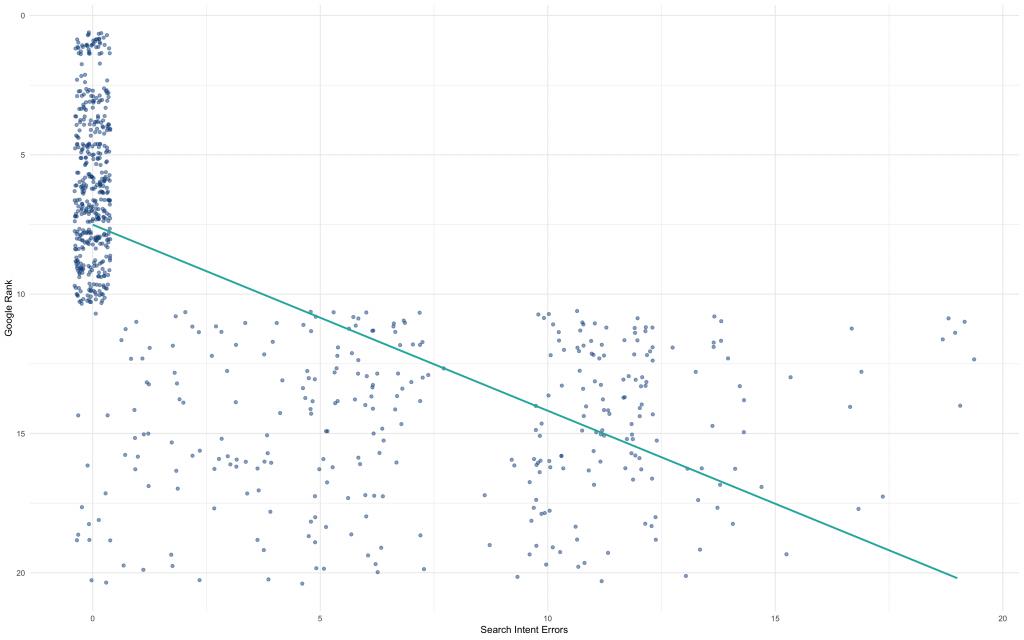

That’s all very well, but what is the evidence that any of this will improve rankings? If we model the rankings by search intent, we get the following beta values for the regression model:

    > coef(intent_mod)
    (Intercept)      m_ints      m_urls
       6.415069    1.150652    4.684908

The p-values for all 3 model components are less than 0.01, which means that both types of search intent error are statistically significant at the 99% confidence level, i.e. if multiple URLs or intentions had no real effect, there would be less than a 1% chance of observing a difference in ranking this large by random chance!
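To show what these coefficients would imply, here is a small worked example. It rests on assumptions the model output alone does not confirm: that `intent_mod` is an ordinary linear regression with ranking position as the response, and that `m_ints` and `m_urls` are 0/1 flags for "multiple intentions" and "multiple URLs". The `predicted_rank` helper is hypothetical.

```python
# Coefficients copied from the model output above.
COEFFICIENTS = {"(Intercept)": 6.415069, "m_ints": 1.150652, "m_urls": 4.684908}


def predicted_rank(m_ints, m_urls):
    """Predicted ranking position, ASSUMING a linear model with 0/1 flags."""
    return (
        COEFFICIENTS["(Intercept)"]
        + COEFFICIENTS["m_ints"] * m_ints
        + COEFFICIENTS["m_urls"] * m_urls
    )


for m_ints, m_urls in [(0, 0), (1, 0), (0, 1), (1, 1)]:
    print(m_ints, m_urls, round(predicted_rank(m_ints, m_urls), 2))
```

Read this way, a page with neither error is predicted to rank at around position 6.4, and having both errors adds roughly 5.8 positions. The multiple URLs term accounts for most of that difference.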

Visualising the model data we see the following:

Naturally, no search intent errors are recorded in the top 10, even though you do observe instances there where multiple URLs rank for the same search query. As mentioned earlier, that is more likely to be a case of Google not knowing what type of content will best satisfy the user.

Visualising intent and Predicting the optimal taxonomy and interlinking structure

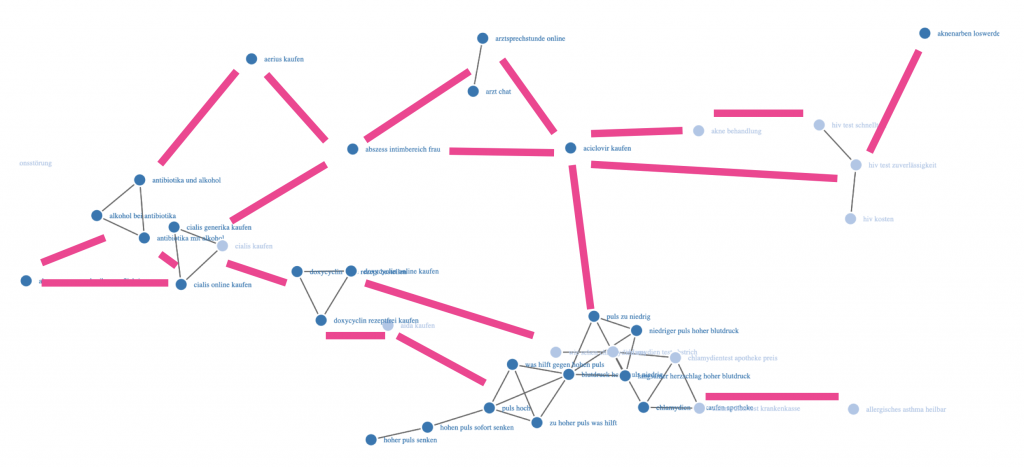

Using the API output we can display how the keywords are or aren’t connected in terms of search intent:

Taking it a step further, if we knew the distance between clusters of search intent, then not only could we see which keywords belong to each other, we could also see how far apart the different search intents are.

This would allow us to move away from a site organised like a shopping catalogue to one that is structured according to how users naturally search for information. That means we can decide how the different pages should be interlinked or weight the links of content in the site towards an intention map as shown above.
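The grouping and distance ideas can be sketched with a small clustering routine. This is an illustration only, not the Intent API’s output format: the pairwise similarity scores, the 0.4 threshold and the function names are assumptions. Keywords are linked when their similarity meets the threshold, and the distance between two clusters is taken as one minus their average pairwise similarity.

```python
from itertools import combinations


def cluster_keywords(keywords, similarity, threshold=0.4):
    """Group keywords into intent clusters (connected components)."""
    parent = {kw: kw for kw in keywords}

    def find(kw):
        # Follow parent links to the cluster root, compressing the path.
        while parent[kw] != kw:
            parent[kw] = parent[parent[kw]]
            kw = parent[kw]
        return kw

    for a, b in combinations(keywords, 2):
        if similarity(a, b) >= threshold:
            parent[find(a)] = find(b)

    clusters = {}
    for kw in keywords:
        clusters.setdefault(find(kw), []).append(kw)
    return sorted(sorted(members) for members in clusters.values())


def cluster_distance(cluster_a, cluster_b, similarity):
    """Distance between clusters: 1 minus the average pairwise similarity."""
    pairs = [(a, b) for a in cluster_a for b in cluster_b]
    return 1.0 - sum(similarity(a, b) for a, b in pairs) / len(pairs)


# Hypothetical pairwise SERP similarity scores; unlisted pairs count as 0.
scores = {
    frozenset(("black hoodie", "mens hoodies")): 0.6,
    frozenset(("coffee table", "side table")): 0.5,
}


def similarity(a, b):
    return scores.get(frozenset((a, b)), 0.0)


keywords = ["black hoodie", "mens hoodies", "coffee table", "side table"]
clusters = cluster_keywords(keywords, similarity)
print(clusters)
print(cluster_distance(clusters[0], clusters[1], similarity))
```

Each cluster is a candidate for a single landing page, and the distances between clusters could inform which pages sit close together in the taxonomy and link to each other.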

Conclusion

Search Intent is literally the intention behind the search query. The reason why search intent matters is that the goal of SEO is to ensure the content ranking for the search query has the highest statistical probability of satisfying the desire of the user. Getting this right means the site achieves higher conversion rates and higher rankings.

If a site doesn’t address or indeed optimise its architecture toward search intent, it’s likely to suffer from Florida 2, Content Overload, Rank Cannibalisation, lower rankings and lower conversion rates.

Term Frequency – Inverse Document Frequency (TF-IDF) is currently the algorithm of choice for enterprise software platforms, which use it to attempt to solve search intent by helping users map new keywords to existing pages.

We believe a better approach is one that makes use of consumer psychology and cultural norms. This works by comparing the search engine results of two keywords and seeing how much the content of the top ranking pages overlaps. If there is sufficient overlap then the two keywords share the same search intent – if not then the keywords should be optimised on different URLs.

There is mathematical evidence from modelling the rankings by search intent, where the p-values for all 3 model components are less than 0.01, which means that both types of search intent error are statistically significant at the 99% confidence level, i.e. if multiple URLs or intentions had no real effect, there would be less than a 1% chance of observing a difference in ranking this large by random chance!

Using a market led approach to determining search intent could also help SEOs see how far apart the different search intents are, moving sites towards a structure that reflects how users naturally search for information.